explicit in η. In principle the sensor itself has a transfer function unequal to 1, so this transfer should be inserted as an extra block in the feedback path, just before the sensor noise is added. However, a good quality sensor has a flat frequency response over a much broader band than the process transfer. In that case the sensor transfer may be neglected. A proper block has to be inserted only if the sensor transfer is not sufficiently broadband (such sensors are easier to manufacture and thus cheaper). In general one will avoid this, because the ultimate control performance depends heavily on the quality of the measurement. As will be shown, the resolution of the sensor places an upper limit on the accuracy of the output control.

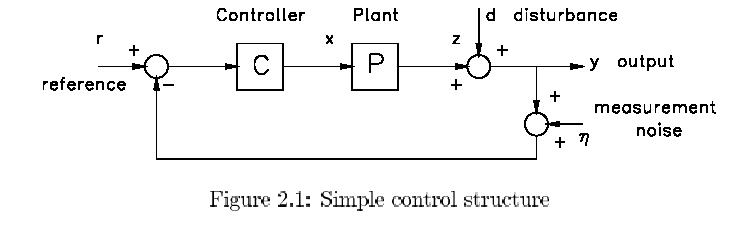

The process or plant (the word "system" is more or less reserved for the total, controlled structure) incorporates the actuator. The same remarks made for the sensor hold for the actuator. In general the actuator will be made sufficiently broadband by proper control loops, and all possibly remaining defects are assumed to be represented in the transfer P. Actuator disturbances are combined with the output disturbance d by computing, or rather estimating, their effect at the output. For this one should know the real plant transfer $P_t$, consisting of the nominal model transfer P plus a possible additive model error ΔP. Only the nominal model P and some upper bound for the model error ΔP are known. Consequently, only upper bounds can be established for the contribution of actuator disturbances to the equivalent output disturbance d. The effects of model errors (or system perturbations) are not yet made explicit in Fig. 2.1, but will be discussed later in the analysis of robustness.

Next we will elaborate on various common control constraints and aims. The constraints can be listed as stability, robust stability and avoidance of actuator saturation. Within the freedom left by these constraints, one wants to optimize aims in a weighted balance. These aims include disturbance reduction and good tracking, without introducing too much effect of the sensor noise. One also wants to keep this total performance at a sufficient level in the face of system perturbations, i.e. performance robustness against model errors. In detail:

Stability. Unless one is designing oscillators or systems in transition, the closed-loop system is required to be stable. This can be obtained by requiring that no bounded disturbance, anywhere in the closed-loop system, can cause other signals in the loop to grow to infinity. This is the so-called BIBO stability, from Bounded Input to Bounded Output. Ergo all corresponding transfers have to be checked for possible unstable poles. This certainly includes the straight transfer between the reference input r and the output y, given by:

$$y = PC(I + PC)^{-1} r \qquad (2.1)$$

But this alone is not sufficient, because possibly unstable poles may vanish in a pole-zero cancellation during the computation of this transfer. Stray signals can also enter at another position: the actual input of the plant, additive to what is indicated as x (think e.g. of drift of integrators). Let us denote this signal by $d_x$. Then the transfer from $d_x$ to, say, y also has to be checked for stability. This transfer is given by:

$$y = (I + PC)^{-1} P\, d_x = P (I + CP)^{-1} d_x \qquad (2.2)$$

Consequently, for this simple scheme we can distinguish four different transfers, from r and $d_x$ to y and x. A closer look soon reveals that the inputs d and η are equivalent to r, and the outputs z and u are equivalent to y.
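The pole-zero cancellation pitfall can be made concrete with a small SISO sketch. It assumes a hypothetical unstable plant P(s) = 1/(s - 1) and a controller C(s) = (s - 1)/(s + 2) that cancels the plant pole. These are illustrative choices, not taken from the text, as are the helper names `reduced_poles`/`is_stable` and the cancellation tolerance. The code writes the four transfers from r and $d_x$ to y and x over the common, uncancelled denominator d_P d_C + n_P n_C. It then cancels coinciding poles and zeros numerically and checks whether the surviving poles lie in the open left half-plane.

```python
import numpy as np

def reduced_poles(num, den, tol=1e-6):
    """Poles of num/den that survive (numerical) pole-zero cancellation."""
    zeros = list(np.roots(num))
    poles = []
    for p in np.roots(den):
        # Look for a zero that coincides with this pole within the tolerance.
        hit = next((i for i, z in enumerate(zeros) if abs(z - p) < tol), None)
        if hit is None:
            poles.append(p)
        else:
            zeros.pop(hit)  # pole cancelled: it disappears from this transfer
    return np.array(poles)

def is_stable(num, den):
    """BIBO stable (continuous time): all remaining poles have negative real part."""
    return bool(np.all(np.real(reduced_poles(num, den)) < 0))

# Hypothetical example: P = nP/dP = 1/(s-1), C = nC/dC = (s-1)/(s+2).
nP, dP = np.array([1.0]), np.array([1.0, -1.0])
nC, dC = np.array([1.0, -1.0]), np.array([1.0, 2.0])

# Uncancelled closed-loop denominator dP*dC + nP*nC = (s-1)(s+3).
char = np.polyadd(np.polymul(dP, dC), np.polymul(nP, nC))

# Numerators over the common denominator `char` (SISO, with d = eta = 0).
transfers = {
    "r -> y": np.polymul(nP, nC),    # PC(1+PC)^-1, eq. (2.1)
    "r -> x": np.polymul(nC, dP),    # C(1+PC)^-1
    "dx -> y": np.polymul(nP, dC),   # P(1+CP)^-1, eq. (2.2)
    "dx -> x": -np.polymul(nP, nC),  # -CP(1+CP)^-1
}
results = {name: is_stable(num, char) for name, num in transfers.items()}
for name, ok in results.items():
    print(f"{name:8s} stable: {ok}")
```

In this example the unstable root s = 1 cancels against a zero in r -> y, r -> x and dx -> x. Only dx -> y keeps it. This illustrates why checking (2.1) alone is not sufficient.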

Disturbance reduction. Without feedback the disturbance d is fully present in the real output y. By means of feedback, the effect of the disturbance can be influenced, and at least reduced in some frequency band. The closed-loop effect is easily computed from:

$$y = PC(I + PC)^{-1} r + \underbrace{(I + PC)^{-1}}_{S} d - PC(I + PC)^{-1} \eta \qquad (2.3)$$

The underbraced expression represents the sensitivity S of the output to the disturbance, thus defined by:

$$S = (I + PC)^{-1} \qquad (2.4)$$

If we want to decrease the effect of the disturbance d on the output y, we thus have to choose the controller C such that the sensitivity S is small in the frequency band where d has most of its power, or where the disturbance is most "disturbing".
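As a rough numerical illustration of "small in the disturbance band", the sketch below evaluates |S(jω)| over frequency. It assumes a hypothetical SISO loop with plant P(s) = 1/(s + 1) and a pure gain controller C = 10, so that S(s) = (s + 1)/(s + 11). These values are chosen only to make the shape visible. Low-frequency disturbances are attenuated by roughly a factor 11. Well above the loop bandwidth, S tends to 1 and feedback no longer helps.

```python
import numpy as np

def loop_gain(s):
    """L = PC for the hypothetical loop P = 1/(s+1), C = 10."""
    return 10.0 / (s + 1.0)

def sensitivity(s):
    """S = (1 + PC)^-1 for a SISO loop, eq. (2.4)."""
    return 1.0 / (1.0 + loop_gain(s))

omega = np.logspace(-2, 3, 6)  # 0.01 ... 1000 rad/s
S_mag = np.abs(sensitivity(1j * omega))
for w, m in zip(omega, S_mag):
    print(f"omega = {w:8.2f} rad/s   |S| = {m:.3f}")
```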

Tracking. Especially for servo controllers, but in fact for all systems where a reference signal is involved, the aim is to let the output track the reference signal with a small error, at least in some tracking band. Let us define the tracking error e in our simple system by:

$$e = r - y = \underbrace{(I + PC)^{-1}}_{S} (r - d) + PC(I + PC)^{-1} \eta \qquad (2.5)$$

Note that e is the real tracking error and not the measured tracking error, which is observed as signal u in Fig. 2.1. The latter incorporates the effect of the measurement noise substantially differently. In equation 2.5 we recognize (underbraced) the sensitivity as relating the tracking error to both the disturbance d and the reference signal r. Because I + PC is known as the return difference, S is also, somewhat awkwardly, called the "inverse return difference operator". Whatever the name, it is clear that we have to keep S small in both the disturbance band and the tracking band.
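Equation (2.5) can be checked numerically without relying on the algebra, because at a fixed frequency the loop is just a set of linear equations. The sketch below assumes the signal conventions implied by the text for Fig. 2.1: y = Px + d, x = Cu, and u = r - (y + η). It uses random complex 2×2 matrices as hypothetical stand-ins for P(jω) and C(jω), solves the stacked loop equations for y, and compares r - y with S(r - d) + Tη.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 2  # hypothetical square MIMO plant with 2 inputs and 2 outputs

def crandn(*shape):
    """Random complex array, a stand-in for a frequency-response value."""
    return rng.standard_normal(shape) + 1j * rng.standard_normal(shape)

# P(jw) and C(jw) frozen at one arbitrary frequency, plus random exogenous signals.
P, C = crandn(n, n), crandn(n, n)
r, d, eta = crandn(n), crandn(n), crandn(n)
I, Z = np.eye(n), np.zeros((n, n))

# Loop equations with unknowns v = [y; x; u] (assumed conventions of Fig. 2.1):
#   y - P x = d          plant output plus output disturbance
#   x - C u = 0          controller
#   u + y   = r - eta    measured error u = r - (y + eta)
A = np.block([[I, -P, Z],
              [Z, I, -C],
              [I, Z, I]])
b = np.concatenate([d, np.zeros(n), r - eta])
y, x, u = np.split(np.linalg.solve(A, b), 3)
e_direct = r - y

S = np.linalg.inv(I + P @ C)       # sensitivity, eq. (2.4)
T = P @ C @ S                      # complementary sensitivity, eq. (2.6)
e_formula = S @ (r - d) + T @ eta  # tracking error, eq. (2.5)

print("eq. (2.5) matches direct solution:", np.allclose(e_direct, e_formula))
print("S + T = I, eq. (2.7):", np.allclose(S + T, I))
```

Solving the full stacked system, rather than the already-eliminated form, keeps the check independent of the derivation. If Fig. 2.1 used a different sign for η, only the last block row would change.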

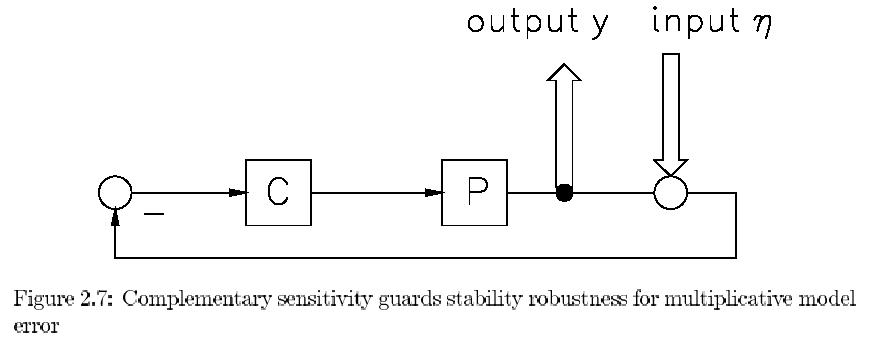

Sensor noise avoidance. Without any feedback, the sensor noise clearly has no influence on the real output y. On the other hand, the greater the feedback, the more the noise disrupts the output. So we have to take care that, in our enthusiasm to decrease the sensitivity, we do not introduce too much sensor noise. This is reminiscent of the optimal Kalman gain. The reference r is a completely independent signal, which is only compared with y in e. We may therefore just as well study the effect on the tracking error e in equation 2.5. The coefficient (relevant transfer) of η is then given by:

$$T = PC(I + PC)^{-1} \qquad (2.6)$$

and is denoted as the complementary sensitivity T. The name derives from the following simple relation, which follows directly from $S + T = (I + PC)(I + PC)^{-1}$:

$$S + T = I \qquad (2.7)$$

For SISO (Single Input Single Output) systems this turns into:

$$S + T = 1 \qquad (2.8)$$

This relation has a crucial and detrimental influence on the ultimate performance of the total control system! If we choose S very close to zero for the sake of disturbance reduction and tracking, we are necessarily left with a T close to 1. This introduces the full sensor noise into the output, and vice versa. Ergo optimality will be some compromise, all the more so because, as we will see, some aims relate to S and others to T.
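Relation (2.8) and the compromise it forces can be checked on a frequency grid. The sketch assumes the same kind of hypothetical SISO loop gain, L(s) = PC = 10/(s + 1), purely for illustration. It verifies S + T = 1 at every grid point. It also shows the consequence of the triangle inequality: since 1 = |S + T| ≤ |S| + |T|, the two can never both be small at the same frequency.

```python
import numpy as np

omega = np.logspace(-2, 3, 501)  # rad/s
s = 1j * omega
L = 10.0 / (s + 1.0)             # hypothetical loop gain PC

S = 1.0 / (1.0 + L)              # sensitivity, eq. (2.4)
T = L / (1.0 + L)                # complementary sensitivity, eq. (2.6)

assert np.allclose(S + T, 1.0)   # eq. (2.8), at every frequency

# Triangle inequality: 1 = |S + T| <= |S| + |T|.
tradeoff = np.abs(S) + np.abs(T)
print(f"min |S|+|T| over grid: {tradeoff.min():.4f}")
print(f"|S|, |T| at low freq : {abs(S[0]):.3f}, {abs(T[0]):.3f}")
print(f"|S|, |T| at high freq: {abs(S[-1]):.3f}, {abs(T[-1]):.3f}")
```

At low frequencies S is small and T is near 1, so noise in that band passes to the output. At high frequencies the roles swap and disturbances are no longer rejected.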

Actuator saturation avoidance. The input signal of the actuator is indicated by x in Fig. 2.1, because the actuator was taken to be incorporated into the plant transfer P. This signal x should be restricted to the input range of the actuator to avoid saturation. Its relation to all exogenous inputs is simply derived as:

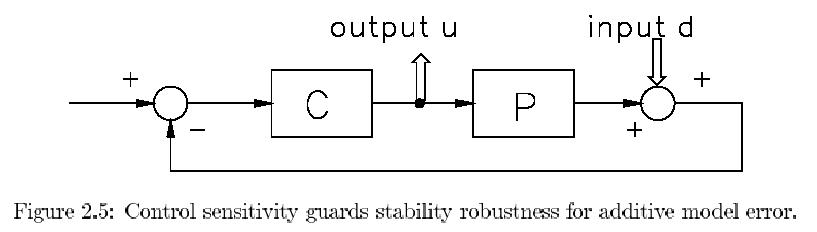

The relevant (underbraced) transfer is named the control sensitivity, for obvious reasons, and is symbolized by R, thus:

$$R = C(I + PC)^{-1} \qquad (2.10)$$

In order to keep x small enough, we have to make sure that the control sensitivity R is small in the bands of r, η and d, with proper relative weightings and with "small" still to be defined. Notice also that R is very similar to T apart from the extra multiplication by P in T (indeed T = PR). Later we will interpret this P as a weighting that cannot be influenced by C, since P is fixed. So R can be seen as a weighted T. As such, the requirement to avoid actuator saturation opposes the other aims related to S.
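The sketch below evaluates the control sensitivity R = C(1 + PC)^{-1} and checks that T = PR. It uses a hypothetical loop with P(s) = 1/(s + 1) and C = 10, both assumptions for illustration. It also shows why R deserves attention at high frequencies. There P rolls off, S approaches 1, and R approaches C itself. As a result, sensor noise η in that band reaches the actuator input x amplified by the full controller gain.

```python
import numpy as np

omega = np.array([0.0, 1.0, 10.0, 100.0, 1e4])  # rad/s
s = 1j * omega
P = 1.0 / (s + 1.0)        # hypothetical plant
C = 10.0                   # hypothetical proportional controller

R = C / (1.0 + P * C)      # control sensitivity, eq. (2.10)
T = P * C / (1.0 + P * C)  # complementary sensitivity, eq. (2.6)
assert np.allclose(T, P * R)  # T is R weighted by the fixed plant P

for w, r_mag in zip(omega, np.abs(R)):
    print(f"omega = {w:8.1f} rad/s   |R| = {r_mag:.3f}")
```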

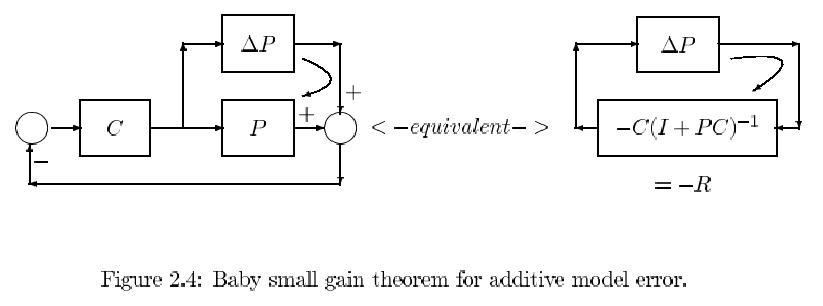

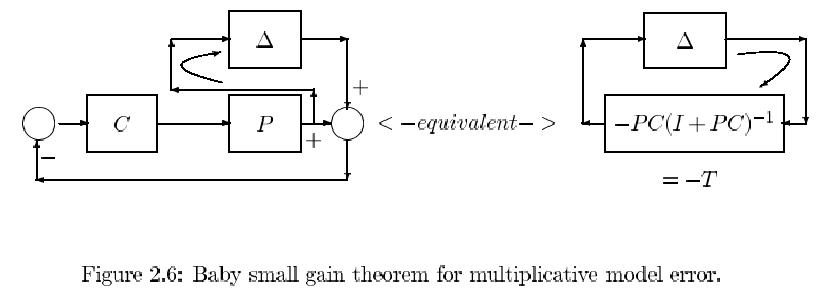

Robust stability. The whole concept is based on the so-called small gain theorem, which trivially applies to the situation sketched in Fig. 2.2. The stable transfer H represents the total loop transfer in a closed loop. Suppose we require the modulus (amplitude) of H to be less than 1 at all frequencies. It is then clear from Fig. 2.3 that the polar curve cannot encircle the point -1. Thus we know from the Nyquist criterion that the loop will always constitute a stable system. So stability is guaranteed as long as:

$$\sup_{\omega} |H(j\omega)| = \|H\|_\infty < 1 \qquad (2.11)$$

"Sup" stands for supremum, which effectively indicates the maximum. (Only when the supremum is approached arbitrarily closely but never actually reached are we not allowed to speak of a maximum.) Notice that we have used no information concerning the phase angle, which is typical of $H_\infty$. The above formula gives a first taste of $H_\infty$ through the simultaneous definition of the infinity norm, denoted by $\|\cdot\|_\infty$.
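The supremum in the small-gain condition can be approximated by sweeping a dense frequency grid. The sketch assumes a hypothetical stable loop transfer H(s) = 0.5/(s + 1), whose peak of 0.5 occurs at ω = 0, so the small-gain condition holds. The helper name `hinf_norm_grid` is illustrative. A finite grid only yields a lower bound on the infinity norm, because a sharp peak between grid points can be missed. This is therefore an approximation, not an exact computation.

```python
import numpy as np

def hinf_norm_grid(H, omega):
    """Approximate ||H||_inf = sup_w |H(jw)| of a stable SISO transfer on a grid.

    Returns the largest modulus found and the frequency where it occurs.
    The result is a lower bound: peaks between grid points are missed.
    """
    mags = np.abs(H(1j * omega))
    k = int(np.argmax(mags))
    return mags[k], omega[k]

def H(s):
    """Hypothetical stable loop transfer H(s) = 0.5/(s + 1)."""
    return 0.5 / (s + 1.0)

omega = np.concatenate([[0.0], np.logspace(-3, 4, 2000)])  # rad/s, including DC
norm, w_peak = hinf_norm_grid(H, omega)
print(f"||H||_inf ~ {norm:.4f} at omega = {w_peak:g} rad/s")
print("small-gain condition ||H||_inf < 1:", norm < 1.0)
```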

Altogether, these conditions may seem somewhat exaggerated, because transfers whose modulus is less than one at all frequencies are not so common. The actual application is therefore somewhat "nested", and is aptly called "the baby small gain theorem", illustrated in Fig. 2.4. In the